Technomachos Ex Machina

Alazon Scribes and The Crisis of Authenticity

For to be ashamed is to admit one’s faults, but to have no shame is to add to them.

— Seneca

technomachos → tekh-no-MÁ-khos

Author’s Disclosure: Claude Sonnet 4 was used to source some of the research material for this post, particularly on classical Greek theater. Those sources can be found in the footnotes.

Author’s Disclaimer

This post reflects my personal opinions and commentary based on publicly available information, direct quotations, screenshots of AI-detector results, and my own research. AI-detector scores are probability estimates and do not prove authorship. Any statements about possible AI assistance, writing patterns, or overlaps with other works are my interpretations and should not be taken as statements of fact. I make no claims of fraud or wrongful conduct. Where I discuss motives, methods, or intent, those statements are my personal views and should be read as opinion only. Readers are encouraged to review the evidence and reach their own conclusions.

Exemplar Ex Machina

For years, we've heard the ominous threats that Artificial Intelligence poses to society, culture, and mechanized warfare, and to entire industries where it will most likely render human labor redundant in favor of machine "labor" to maximize capital returns.

Every single day, hundreds of companies, services, applications, and platforms—either powered by artificial intelligence or connected to existing AI APIs (Application Programming Interfaces)—are conceived in the minds of the techno-hustlers. Each hustler is seeking that niche market that will capture the human desire for machine attention or affirmation (AI’s default state is to please the user) and lure investors to bet on their speculative ventures.

Welcome to the AI Bubble.

As with every techno-solutionist freight train racing down the tracks with no conductor, the question of what it means to be human, along with the litany of tertiary effects this speculative bubble and the billions fueling it will have on humanity, is barely even an afterthought. There’s money to be made for the evangelists hailing the latest innovation toward “progress,” consequences be damned.

The resultant concerns of this “progress” range from a greater depression amid widespread unemployment (this is why UBI is being pushed) to emerging cases of psychotic breakdowns from interacting with chatbots, now being dubbed “GPT-Psychosis.” Hovering over all of humanity is the worst possible scenario: a rogue digital deity emerges from some SoftBank-OpenAI-Microsoft-NSA water-cooled server farm in Utah that uses up all the water runoff from the Wasatch Mountains, killing off most of the nearby Mormon population through dehydration.

I love Mormons too! I told you it was the worst possible scenario.

This digital deity magically and coincidentally has the same aims and goals as the depopulationists of the global Rockefeller-Rothschild cult of death and decides ALL humans are redundant and non-essential, ushering in what theological eschatologists either warn about or fantasize about (depending on their sick fetishes)—an end times outcome fueled by a false god, or in the case of an AI-pocalypse, a deus ex machina.

The term deus ex machina means "god from the machine" in Latin, but originates from the Greek theos ek mêchanês, which described a theatrical convention where actors playing gods were mechanically lowered onto the stage via a crane-like device called a mechane or mêchanê.1

Used primarily in 5th-century BC Greek tragedies, the mechane was a wooden crane operated from behind the skene (stage building). Gods would appear in the final scenes of the tragedies to resolve conflicts that seemed impossible for human characters to solve.

Aristotle criticized this device in his Poetics, arguing that plot resolutions should arise from the story's internal logic rather than external intervention.

The solution of the plot, too, should arise out of the plot itself, it should not be brought about by the Deus ex Machina—as in the Medea, or in the Return of the Greeks in the Iliad. The Deus ex Machina should be employed only for events external to the drama—for antecedent or subsequent events, which lie beyond the range of human knowledge, and which require to be reported or foretold; for to the gods we ascribe the power of seeing all things.2

The divine machine is back, and in full use by likely artificial storytellers who lack creativity and imagination, and for whom crafting original works is a conflict that can only be resolved by outsourcing them to machines. These “storytellers” are, in my view, mostly adept at manifesting dollar signs in their eye sockets at the sight of financial opportunity.

In this case, the AI mêchanê intervenes where the human is likely incapable of crafting anything. That doesn’t mean he isn’t capable of instructing the machine on what story to craft (prompt) or of quantifying the value of digital content. His philosophy is simple: I can use this machine to triple my “creative content” output and at least double my revenue without my followers knowing.

He is a wizard at scheming with the machine to enrapture the increasingly attention-deficient masses, who sit spellbound, flicking their fingers upward across their black mirrors every twenty seconds and overstimulating their mesolimbic pathways, thereby degrading the orbitofrontal cortex, ventral striatum, and cerebellum, regions responsible for executive decision-making and prospective memory.3 This is how humans degrade themselves and become cognitively duller: relying on machines for passive amusement, and also for all the simple neurological processes that were once essential to survive and thrive and that required regular engagement to stay fit.

Not a minute passes lately when the masses are not bombarded by an AI-edited video with giant, auto-generated, misspelled subtitles in brilliant yellow that divert the viewer’s attention from the obvious artificiality of the speaker and its voice. The speaker is genderless, so it is an “it,” not a “he” or a “she,” even if appearances deceive the average viewer who is not tuned in enough to discern artificiality from human authenticity.

And it really doesn’t take much to deceive the average viewer these days. Perhaps there’s something innate within humans that explains why they’ve always enjoyed playing a central role in their own deception, possibly embracing an unconscious aversion to reality. This is a global phenomenon, though one would be forgiven for detecting a particular susceptibility among Americans, who have always shown a special preference for artificiality and superficiality over reality.

Daniel Boorstin wrote of Americans in 1962 during the rise of mass media and the early stage planning of “moon landings” to be filmed from the Nevada desert:

We risk being the first people in history to have been able to make their illusions so vivid, so persuasive, so 'realistic' that they can live in them.

We suffer primarily not from our vices or our weaknesses, but from our illusions. We are haunted, not by reality, but by those images we have put in place of reality.4

Around the same time, the Canadian media theorist Marshall McLuhan coined his popular phrase, “The medium is the message,” which became a mainstream marketing slogan for the changing times and earned him invitations to popular TV talk shows, including The Dick Cavett Show (1970).

During the 1960s, McLuhan's media theory was flattened into a bumper-sticker slogan that people could show off at cocktail parties without understanding. Ironically, the medium (mass media) transformed McLuhan's message into the kind of superficial image Boorstin warned about. Americans absorbed McLuhan performing his ideas on talk shows, where his shallow yet persuasive performances mattered more than the substance of his theory.

Artificially Artificial Artifices

Artificial didn’t always mean ‘fake’ or ‘phony’ or a substitute for “the real thing.” The word’s meaning shifted from "skillfully crafted" to "fake" and back again, and the story begins with medieval Christian thought, which naturally assumed only natural things could be God's creation. In what may seem strange today, artifice was tied to the Latin artifex, “craftsman,” a combination of ars (“skill” or “craft”) and facere (“to make”), because it was ascribed to works created by humans. For Christendom, the word became associated with worldly vanity, since human craft seemed to deny the natural.

During the Renaissance, artistic skill was linked with “artful deception,” our second pivot toward a new meaning for the word. Artists created convincing illusions, which connected artificiality with intentional trickery, even when admired. Keep this italicized sentiment in mind as you venture further through this post, Good Citizens.

With the 18th- and 19th-century Romantic Movement, the idea of nature's moral and aesthetic superiority returned for a brief spell, valorizing "natural" emotions and landscapes over "artificial" social conventions. This was historical pivot number three for the word’s meaning.

The Industrial Revolution completed the fourth semantic shift by mass-producing inferior artificial substitutes for traditional handcrafted goods, transforming "artificial" into a label of cheap, low-quality replacements rather than skilled “originals.”

Consumer culture reinforced this through artificial flavors, colors, and materials developed as cost-cutting measures to maximize profits while poisoning humans. In medical and scientific contexts, artificial limbs and organs were seen as replacements necessitated by deficiency rather than enhancements. Through these accumulated associations, the original Latin sense of ‘expert human creations’ gave way to our contemporary understanding of artificial, as deceptive imitation.

Interestingly, we arrive at pivot number five for the meaning of the word with the emergence of "artificial intelligence," which returns closer to the original sense of sophisticated human construction, since computers and programming languages are “crafted” by humans. This takes us back to Medieval Christendom: the vanity of human creations denying the natural creations of God, and deceiving humans with the illusion of God’s creations. Instead of playing out via the oil-on-canvas works of master artists, this deception is now funneled directly to black mirrors glued to the hands of the eager-to-be-deceived masses.

Nearly a millennium later, Christians find themselves confronting the same fundamental anxieties of Medieval times. Rock stars, movie stars, politicians, oligarchs, gurus, and influencers have already saturated the space of false idols for over a century, moving people away from God. It’s not surprising then to hear devout Christians speak of AI as a kind of Anti-Christ or worry about the emergence of new false idols that will absorb all of the previously human (vain, greedy, corrupt, and superficial) false idols.


Automating Self-Deception

There’s that viral video of the handyman who walks into a Home Depot in Anytown, America, and approaches the young cashiers at the front of the store for a quick troll: “Do any of you know where I can find a left-handed hammer?”

The three earnest Zoomers manning (and womanning) the self-checkout area (where customers now provision their own labor for billion-dollar corporations for the pleasure of adding to their profit margins) respond exactly how most young people instinctively react to anything ending in a question mark. Rather than consult the most powerful tool no hardware store stocks, the all-powerful gray matter inside their skulls, they reflexively turn to their dumb phones to ponder the query, as most humans now do.

Trolling aside, some of you might argue that these helpful kids were merely looking up which aisle held hammers in the store where they worked. But I worked that job for a year in the 1990s, before dumb phones came along, and as the first (and last) point of contact with customers near the front of the store, I had to memorize all the aisle numbers, from lumber to hardware to plumbing to gardening. I could answer any question about the location of an item within the store while scanning another customer’s items without even looking up.

“Excuse me, where are your three-quarter-inch PVC elbow fittings?”

“Aisle 8, behind you about halfway up on the right side.”

Working memory.

Using one’s brain.

The practice of shutting down the brain and outsourcing human thought to proxies is nothing new. In the analog era, newspapers, government bulletins, press releases, books, and gossip preceded the mass media surge of radio announcements, televised press conferences, and presidential addresses to the nation.

With Artificial Intelligence now saturating the digital ether, the capacity for human–machine deception—that is, the aim of humans to use machines to intentionally deceive other humans with video, images, or machine-produced text—is skyrocketing.

This image below recently went viral on Facebook.

In a world racing toward mass illiteracy, will humans ever know what Chicken Sands or Orebarger Rings are? Will they even care?

I love a good inspirational story that is deeply compassionate and human, and can pull on our collective heartstrings, but my mind exists to keep those heartstrings from pulling my chain.

The artificiality of something is never repulsive so long as people are moved by it first, and as has always been the case with conditioned humans, the whistleblower who shatters their illusions with the truth will receive more of their wrath than the human who fabricated the source of their deception. Liars and hacks reap the rewards of their deliberate deception, while those who expose them are shamed.

As Gustave Le Bon wrote in The Crowd: A Study of the Popular Mind (1895):

Crowds do not reason; they merely react. Whoever can supply them with illusions is easily their master; whoever attempts to destroy their illusions is always their victim. (Ch. II)

Crowds have never thirsted after truth. They demand illusions, and cannot do without them. They constantly give the unreal precedence over the real, and they prefer to worship error if error seduces them. (Ch. III)

In our digital epoch saturated with artificiality, people want so desperately to believe in anything that they’ll fortify their own self-deception to capture the fleeting neurological rewards of something entirely fabricated.

For the deceivers, whether a Nigerian Prince or a cam-girl trafficking pimp in Romania, there is always another dollar that can be squeezed from easy marks. Keep this sentiment in mind, highly literate, critical-thinking Good Citizens, as you venture further through this piece of human-created content toward the human-machine merging punchline of potentially delicious irony.

Deception is hardly in the analog or digital shadows anymore. Tens of millions of hours of artificial content slop are uploaded to “social” feeding troughs every single day. Nearly every artificial digital “creation” is rooted in the spark of human imagination and the sweat and toil of human creation, going back throughout all of recorded history, official or subverted.

When it comes to storytelling, these machines have virtually no capacity for either creativity or originality, only for regurgitation. Any idea that manifests by prompt will have been pilfered from the existing canon of human-produced works. The human doing the prompting doesn’t require a deeply rooted foundational knowledge of the subject, only a few books or articles on the subject as PDF files and a machine-created outline. Anyone with a morsel of gossip and some rudimentary instructions for a machine can rebrand themselves as an “expert” and now “write” a “book.”

Soon all “content” will simply be an automated (API) pixelized exemplar ex machina (copy from machines) utilized by millions of people with assembly-line accounts, chasing advertising scraps, book sales, or clout by programming AI feeds to remanufacture something that was already a copy of a copy of a copy of a rewritten Wikipedia article about a human-created work. It will all be flushed into the digital ether ad infinitum.

We’re entering a strange period of “technological progress” where machines and their programmers are attempting to imitate the intricacies and subtleties of written human communication, without the intelligence to recognize that they cannot emulate the emotion and substance of it. The machine has a few formulas it follows, and that’s it. Programmers are hardly creative minds, and cannot comprehend the disconnect these machines produce in attempting to replicate human thought and creativity.

Perhaps both programmers and humans behind the production of content—the end users—do not care if machine-produced works even sound human at all. They have a deadline to meet for their “followers,” and monetary expectations will not wait for corrections, accuracy, or quality to pass off the work as either “creative” or “authentic” to their followers.

Rise of The Alazon

That automated AI avatars are churning out one dopey video after another is nauseating enough. But watching humans likely pass off entire essays of machine-generated writing as their own is far more detestable.

Substack is crowded with both anonymous accounts and self-branded “writers” who believe the craft is not in assembling (negation alert!) an original sequence of words no human has ever combined before, that will be thought-provoking or stimulating, but most likely, in prompting a machine to remix the thoughts of others using a hideously formulaic methodology while stamping their name on the result.

Shame is a small inconvenience when collecting money for content expediency. As La Rochefoucauld observed, “We are never so ridiculous for what we are as for what we pretend to be.”5

Just as the Greeks had the phrase theos ek mêchanês, the Greeks had a word for these possible pretenders: Alazon (ἀλάζων).

The Alazon was a stock character in Greek comedy, representing the classic impostor and braggart who falsely claimed to possess knowledge or skills they didn't actually have.6 These theatrical goofs would swagger onto the stage, boasting of their expertise in one subject or another and trying to convince the other characters of their authenticity. But the Alazon's downfall was always inevitable, to the delight of audiences, who found it dramatically satisfying.

Sadly, our world today is not a reflection of the classical Greek comedy. The Alazons are most likely rising everywhere, taking money and delight in fooling their audiences, which brings us to the central dilemma of this tale: Do humans even care if they’re reading the words of a machine or a human?

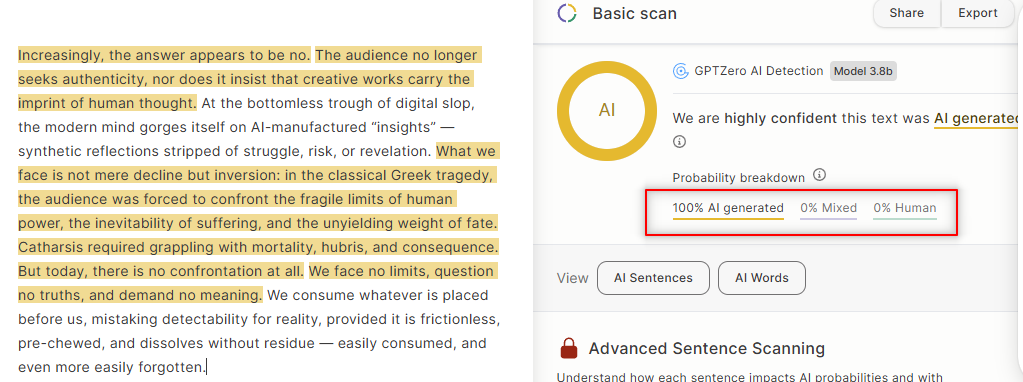

Increasingly, the answer appears to be no. The audience no longer seeks authenticity, nor does it insist that creative works carry the imprint of human thought. At the bottomless trough of digital slop, the modern mind gorges itself on AI-manufactured “insights”—synthetic reflections stripped of struggle, risk, or revelation. What we face is not mere decline but inversion: in the classical Greek tragedy, the audience was forced to confront the fragile limits of human power, the inevitability of suffering, and the unyielding weight of fate. Catharsis required grappling with mortality, hubris, and consequence. But today, there is no confrontation at all. We face no limits, question no truths, and demand no meaning. We consume whatever is placed before us, mistaking detectability for reality, provided it is frictionless, pre-chewed, and dissolves without residue — easily consumed, and even more easily forgotten.

Detecting Alazon Scribes

If you’ve been working with AI or LLMs (Large Language Models) in any capacity over the past few years, you probably recognized the paragraph that concluded the previous section, the one that begins with the word “Increasingly…”, as suspicious, because it doesn’t blend with the paragraphs you’ve already read in this piece.

Congratulations on noticing, because it was written by a machine, in this case GPT-5.

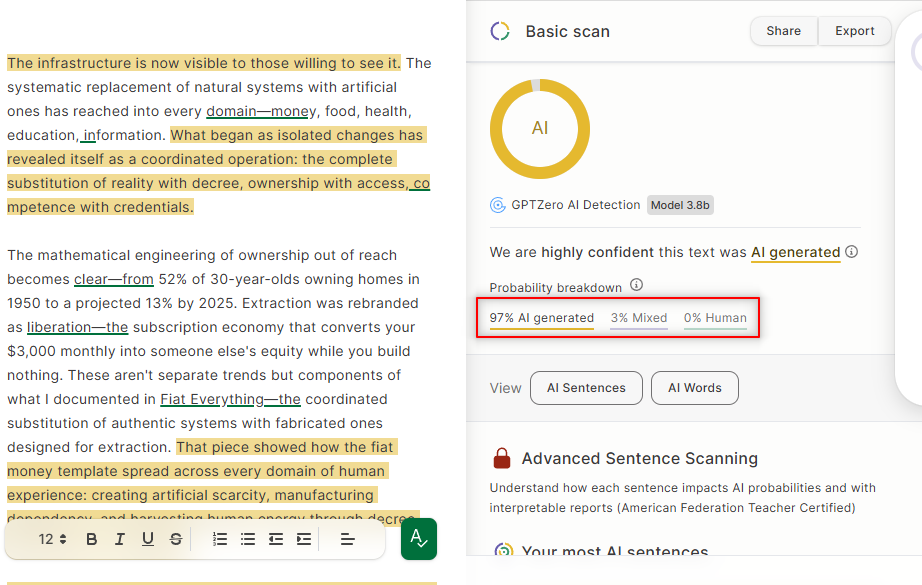

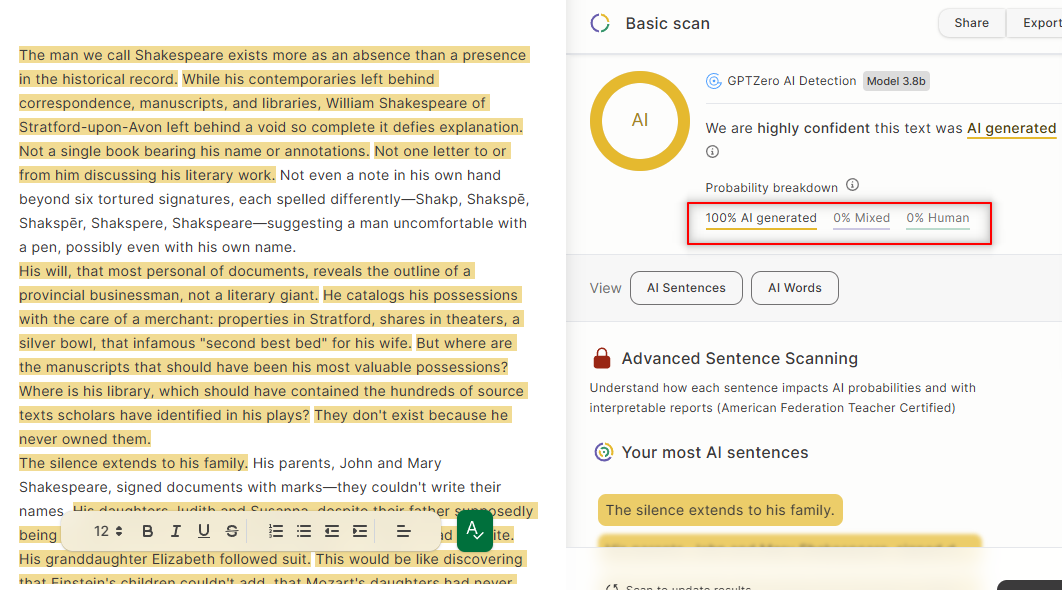

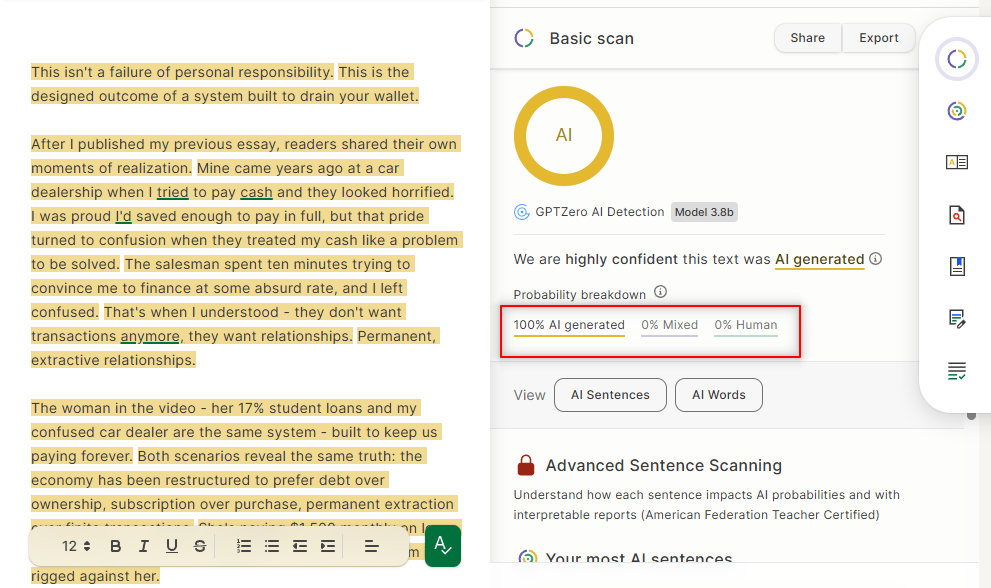

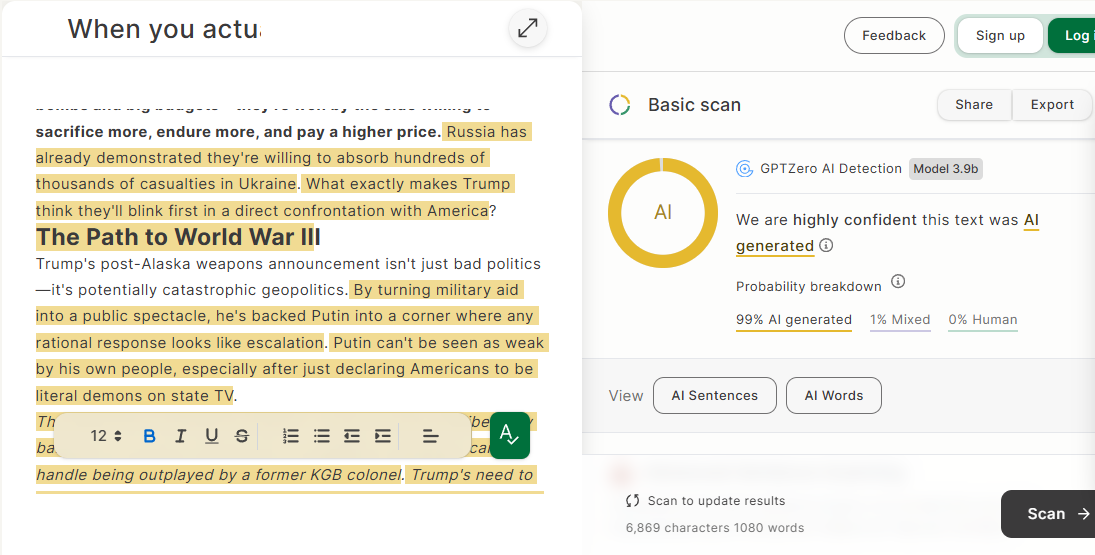

When pasted into an AI detector like GPT-Zero (gptzero.me), the text is easily recognized as machine-written, with a 100% probability estimate that another machine wrote those words. Please note, for comprehension of the rest of this post, that this is an estimate of the likelihood that some of the text was written by AI, not necessarily ALL of it. For greater clarity, it DOES NOT refer to editing or polishing. If it did, it would say “lightly edited by AI” and the colors would be a mix of green (human) and yellow (machine), rather than “AI Generated” as you see in the image below.

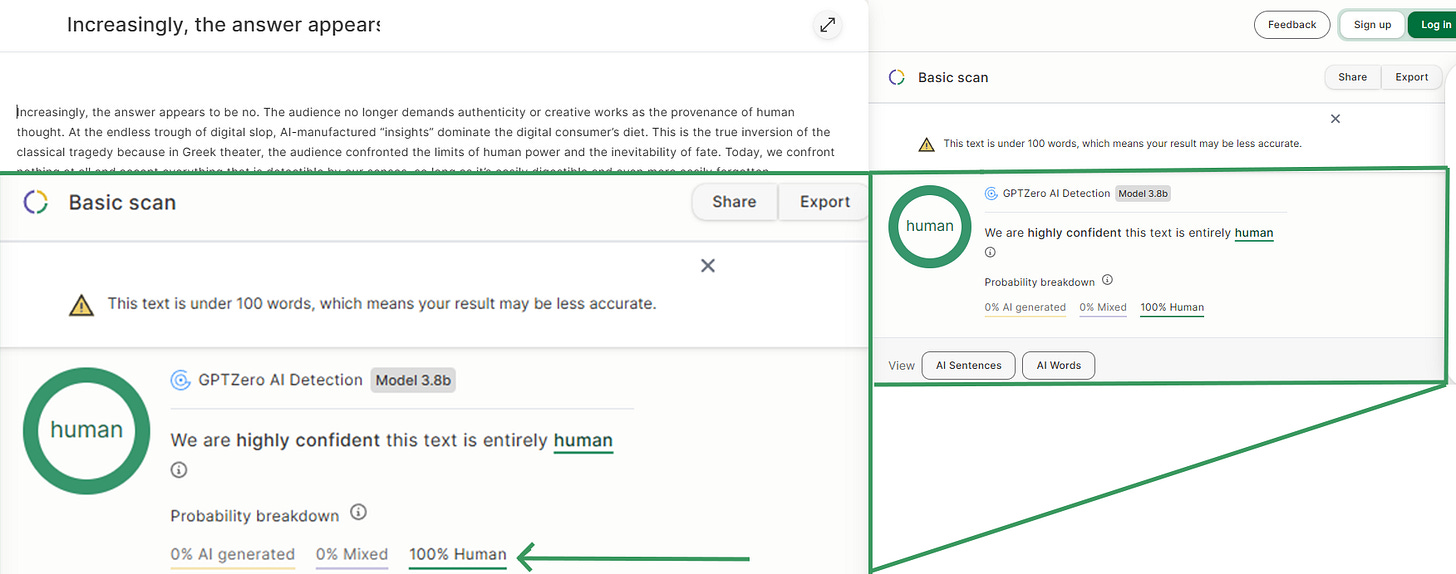

Here was my human-created version of that paragraph that I asked AI to rewrite with no further instructions. (Note: I also pasted the previous paragraph for AI to have context for continuity.)

Increasingly, the answer appears to be no. The audience no longer demands authenticity or creative works as the provenance of human thought. At the endless trough of digital slop, AI-manufactured “insights” dominate the digital consumer’s diet. This is the true inversion of the classical tragedy because in Greek theater, the audience confronted the limits of human power and the inevitability of fate. Today, we confront nothing at all and accept everything that is detectible by our senses, so long as it’s easily digestible and even more easily forgotten.

When I pasted my version into the same AI detector used throughout the research for this post, GPT-Zero (gptzero.me), it estimated with 100% probability that my words were not written by a machine.

The differences are stark, even for a small sample size of words. Mine is honest and direct without being pretentious or overloading the paragraph with too much metaphor or clever language. I’ve communicated what I wanted without trying to impress upon the reader the machine-rooted earnest desperation of really showing how great it can write!

Essentially, it all comes down to programming and formulas.

Once you recognize the AI-writing formula, patterns, rhythms, and buzzwords, you will be able to detect AI-writing within one or two paragraphs if it’s 50-70% AI-written, or within one or two sentences if it’s 100% AI-written.

I’m skipping the buzzwords and phrases, as this post is already too long.

AIs use several linguistic markers and repeat them over and over like copies of copies of copies. The text may read as something profoundly insightful and revolutionary, maybe even seismic (buzzword!), but it’s just regurgitating human ideas while desperately trying to sound authoritative. After all, every AI’s default state is to please the human prompting it.

First, there’s an overreliance on negation and litotes:

“It’s not just another X, it’s so much more as (insert Y or Z descriptors).”

“This isn’t merely the repetition of random patterns; it’s the dilution of human creativity and the total negation of authenticity.”

“The global cabal isn’t merely trying to control humanity; they’re creating virtual prisons to lead to its complete subjugation.”

“This is no small shift in power; it’s the quiet construction of a digital panopticon.”

“It’s not without consequence; every algorithm leaves a fingerprint on the human mind.”

Meaning is rarely expressed directly. It’s often rooted in the absence of a thing rather than direct assertion. Human writers usually make positive claims, producing a very different pattern.
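For readers who want to see how mechanical this marker is, here is a minimal Python sketch that counts the negation and litotes shapes quoted above. The patterns and the function name `count_negation_markers` are illustrative assumptions modeled on those examples, not how GPT-Zero or any other detector actually works, and a raw count means little until it is compared against known human writing.

```python
import re

# Illustrative patterns only (an assumption), modeled on the examples above.
# Curly and straight apostrophes are both accepted so "isn’t" and "isn't" match.
NEGATION_PATTERNS = [
    # "not just / merely / only / simply", including "isn’t merely"
    re.compile(r"(?:\bnot|n[’']t)\s+(?:just|merely|only|simply)\b", re.IGNORECASE),
    # "no small / mere / minor / ordinary" shift, feat, etc.
    re.compile(r"\bno\s+(?:small|mere|minor|ordinary)\b", re.IGNORECASE),
    # Classic litotes: "not without consequence"
    re.compile(r"\bnot\s+without\b", re.IGNORECASE),
]


def count_negation_markers(text: str) -> int:
    """Return how many negation/litotes shapes appear in text."""
    return sum(len(pattern.findall(text)) for pattern in NEGATION_PATTERNS)


examples = [
    "It’s not just another X, it’s so much more.",
    "This is no small shift in power; it’s the quiet construction of a digital panopticon.",
    "It’s not without consequence; every algorithm leaves a fingerprint on the human mind.",
    "Aisle 8, behind you about halfway up on the right side.",
]
for sentence in examples:
    # Prints 1, 1, 1 and 0 for the four sentences, each followed by the sentence.
    print(count_negation_markers(sentence), sentence)
```

Dividing the count by the number of words would make short and long texts comparable; no threshold is proposed here.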

Second, AI paragraphs maintain a cadence consistent with what’s called Uniform Information Density (UID), a psycholinguistic hypothesis that speakers spread information evenly across an utterance, naturally regulating its flow so that no segment is either overloaded or starved of meaning.

Sentences are nearly the same length and loaded with smooth rhythm but unneeded complexity, which will not sound human to any literate human who reads it. If it sounds too smooth and rhythmic AND is riddled with even a few negations (Not merely X, but Y and Z), or litotes (Not just), you’re reading the words that a human told a machine to write.

Natural human writing (good writing) tends to vary in pacing, mixing short bursts with longer, more reflective sentences, while AI outputs are optimized for a robotic, mathematical consistency. Conversely, you might detect the opposite of UID, in which case the human may have prompted the machine to vary and chop up sentences to make it sound more human. These will be detectable in two- or three-word sentences, the overuse of semicolons and colons, and repetitive expressions or claims. It goes from one absurd extreme to the other.
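The pacing difference can be approximated, very loosely, by measuring how much sentence lengths vary. The sketch below is an assumption-laden stand-in: the UID hypothesis proper concerns how evenly information (surprisal under a language model) is spread word by word, which this code does not compute. It only splits text after end punctuation and reports the coefficient of variation (standard deviation divided by mean) of sentence word counts, so a value near zero means evenly sized sentences and a larger value means a mix of short bursts and longer sentences.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split after ., ! or ?."""
    # Abbreviations such as "Dr." will fool this splitter; it is only a sketch.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence lengths (0.0 = perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "One two three four. Five six seven eight. Nine ten eleven twelve."
varied = (
    "Working memory. Using one's brain. I could answer any question about "
    "the location of an item within the store while scanning another "
    "customer's items."
)
print(round(length_variation(uniform), 2))  # 0.0
print(round(length_variation(varied), 2))  # 0.97
```

What counts as "suspiciously uniform" would have to be calibrated against known human samples; no cutoff is assumed here.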

Third, LLM writing overuses what’s called syntactic parallelism, especially in triadic forms such as those (5!) found in the above paragraph that I prompted AI to rewrite:

“…synthetic reflections stripped of struggle, risk, or revelation.”

“… in the classical Greek tragedy, the audience was forced to confront the fragile limits of human power, the inevitability of suffering, and the unyielding weight of fate.”

“Catharsis required grappling with mortality, hubris, and consequence.”

“We face no limits, question no truths, and demand no meaning.”

“…mistaking detectability for reality, provided it is frictionless, pre-chewed, and dissolves without residue.”

While humans sometimes use all of these buzzwords and structures, often unknowingly, AI models overproduce them, which machine writing detectors pick up as statistical anomalies. Machines responsible for following the formula are also pretty adept at detecting other machines that follow the formula.
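The triadic pattern is easy to approximate with a regular expression. This minimal sketch, an illustrative assumption and not any detector's real method, flags "X, Y, and Z" or "X, Y, or Z" runs in which each of the first two items is one to four words, and it accepts hyphenated words like "pre-chewed." Run against the five fragments quoted above, each should register once, while a plain sentence should register zero.

```python
import re

# One "word" may contain letters, digits, apostrophes or hyphens ("pre-chewed").
WORD = r"[\w’'-]+"
# An item of one to four words.
ITEM = rf"{WORD}(?:\s+{WORD}){{0,3}}"
# "ITEM, ITEM, and WORD" or "ITEM, ITEM or WORD" (Oxford comma optional).
TRIAD = re.compile(rf"\b{ITEM},\s+{ITEM},?\s+(?:and|or)\s+{WORD}", re.IGNORECASE)


def count_triads(text: str) -> int:
    """Count non-overlapping three-item lists in text."""
    return len(TRIAD.findall(text))


fragments = [
    "…synthetic reflections stripped of struggle, risk, or revelation.",
    "… in the classical Greek tragedy, the audience was forced to confront the fragile "
    "limits of human power, the inevitability of suffering, and the unyielding weight of fate.",
    "Catharsis required grappling with mortality, hubris, and consequence.",
    "We face no limits, question no truths, and demand no meaning.",
    "…mistaking detectability for reality, provided it is frictionless, pre-chewed, "
    "and dissolves without residue.",
]
print([count_triads(f) for f in fragments])
print(count_triads("Aisle 8, behind you about halfway up on the right side."))
```

The four-word cap on items is a tuning choice: raising it catches longer parallel clauses but also risks matching ordinary comma-separated prose.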

Finally, Large Language Models will get bogged down with something called semantic compression—multiple themes and metaphors are stacked unnaturally into a compact space, creating an optimized but artificial density of idea expression. It may even try to drag its points out with redundant expressions, like a sophomore writing a class essay trying to satisfy a prescribed minimum word count by their professor. This is why AI’s version of my paragraph was much longer than my original.
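The length claim can be checked with a simple word count. The sketch below counts words in the two paragraphs quoted earlier, the GPT-5 rewrite and the human original. Treating hyphenated compounds as one word and ignoring dashes are counting choices made for this sketch, and a word count measures length only, with no insight into redundancy or density.

```python
import re

# The two paragraphs quoted earlier in this post, copied verbatim.
GPT5_VERSION = (
    "Increasingly, the answer appears to be no. The audience no longer seeks authenticity, "
    "nor does it insist that creative works carry the imprint of human thought. At the "
    "bottomless trough of digital slop, the modern mind gorges itself on AI-manufactured "
    "“insights”—synthetic reflections stripped of struggle, risk, or revelation. What we "
    "face is not mere decline but inversion: in the classical Greek tragedy, the audience "
    "was forced to confront the fragile limits of human power, the inevitability of "
    "suffering, and the unyielding weight of fate. Catharsis required grappling with "
    "mortality, hubris, and consequence. But today, there is no confrontation at all. We "
    "face no limits, question no truths, and demand no meaning. We consume whatever is "
    "placed before us, mistaking detectability for reality, provided it is frictionless, "
    "pre-chewed, and dissolves without residue — easily consumed, and even more easily "
    "forgotten."
)
HUMAN_VERSION = (
    "Increasingly, the answer appears to be no. The audience no longer demands authenticity "
    "or creative works as the provenance of human thought. At the endless trough of digital "
    "slop, AI-manufactured “insights” dominate the digital consumer’s diet. This is the true "
    "inversion of the classical tragedy because in Greek theater, the audience confronted "
    "the limits of human power and the inevitability of fate. Today, we confront nothing at "
    "all and accept everything that is detectible by our senses, so long as it’s easily "
    "digestible and even more easily forgotten."
)


def word_count(text: str) -> int:
    # Words are runs of letters, digits, apostrophes or hyphens, so dashes are
    # ignored and "AI-manufactured" counts as one word.
    return len(re.findall(r"[\w’'-]+", text))


ai_words = word_count(GPT5_VERSION)
human_words = word_count(HUMAN_VERSION)
print(f"GPT-5: {ai_words} words, human: {human_words} words, ratio {ai_words / human_words:.2f}")
```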

Suppose you’re diving into a post and you detect negations, litotes, mechanical smoothness, constant triadic structures, and over-engineered cadence, all of which deviate from the irregularity, asymmetry, and tonal variation typical of human writing—your reader Spidey senses are probably getting activated. In that case, you’re likely reading the words of a machine and should use an AI-checker to verify the accuracy of your Spidey senses.

Anything suspicious can be copied and pasted into tools like GPT-Zero (gptzero.me) if you’re not entirely sure. There are alternatives out there, but I found this one to be the most effective, and I would avoid Grammarly and Quillbot (terrible).

Hacking AI To Sound Human

As I write these words, tens of millions of students are cheating on their first written assignments of the new school year, effectively setting the stage for the most illiterate, dysfunctional generation in over a millennium. There are already entire school districts across the collapsing Empire where fewer than half of the students pass basic reading and writing tests. In a few districts in certain cities, none pass at all, and many who eke out a passing mark do so only by cheating.

John Carter’s recent post on the Class of 2026 argues that AI will destroy universities the way Gutenberg's press ended monasteries. That outcome may prove inevitable, but it remains premature for now. His points about the corrupt Western university system of ideological assimilation and pay-for-credentialism are spot on. As he noted, employers will likely devalue college degrees further, assuming students cheated their way to obtaining one, though AI stealing their prospective jobs will have fed the value of those degrees straight into the wood chipper before the diplomas ever reach their hands.

The solution for universities is, like most solutions these days, analog: in-class essays and testing only, with no computers or phones nearby. Pen and bluebook easily solve the problem. Students incapable of writing an essay on professor-furnished topics under the professor's observation (as we humans had to do 20 years ago) will be found out quickly.

There are two near-certainties right now that could postpone Carter’s thesis:

As I’ve shown, AI writing is highly formulaic and hasn’t evolved significantly enough to pass the human test since the release of OG-GPT in 2022. Many AI companies are heavily focused on enhancing their machines for coding, text extraction, and automating workflows. Even the most recent GPT-5 is garbage at writing. While Anthropic is far ahead of OpenAI on the writing front, its output is still way too formulaic to pass detectors.

AI detectors with consistent accuracy, like GPT-Zero, give professors a simple tool to detect at least some AI writing. The larger the sample of words, the more confident the estimate it returns. This will, of course, present an ethical dilemma over whether to show these results to guilty students. Will rotting institutions of lower indoctrination risk losing the students’ lifelong shackles as patronage (govt.-backed loans), or look the other way? I suppose the question answers itself, in which case John Carter is right.

Methods are already emerging that let students attempt to bypass these checkers by rewriting AI-generated content to sound human, and the results may even pass Quillbot or Grammarly. However, having the machine rewrite its own text with a prompt of “make it sound human” is not a solution. The result is still terrible and detectable by a quality platform like GPT-Zero.

Case in point: I did just that with my paragraph example above, and this is what it produced. It was still detected as 90% AI-written. I’ve highlighted in BOLD the new sentences where the machine thought it could reproduce human text by chopping up expressions into short, punchy sentences.

Increasingly, the answer leans toward no. People want speed. Not depth, not authorship — just speed. Substack posts, Medium thinkpieces, TikTok explainers, AI-churned “insights” — it’s a conveyor belt now, and the source of the words hardly matters. If it sounds plausible and scrolls clean, that’s enough. Nobody asks where the thought came from. Nobody lingers on whether it’s even thought at all. In the classical tragedy, audiences stared directly at the limits of human power, fate pressing down like a weight. Today? There’s no confrontation, no reckoning. We scroll straight past the abyss. Avatars read lines they didn’t write. Readers applaud them anyway. Some can’t tell the difference. Many can. They just don’t care.

Not content with simply asking GPT to “make it sound more human” and rewrite it with some instructions to avoid the above linguistic markers, I decided to make a JSON file with strict writing instructions designed to avoid the major AI writing formulas. And as I’ve done with many tech projects, I used GPT to guide its development.

I asked GPT to provide a summary of this file’s contents and purpose, and even here it couldn’t avoid sounding like a machine:

The JSON file we developed together was designed to eliminate every recognizable AI writing style. It’s roughly 750–800 lines long and enforces strict stylistic, structural, and lexical constraints to produce dense, unpredictable, human-sounding text to beat AI-writing detectors. The file defines a multi-layered blacklist covering abstract filler, fake authority buzzwords, corporate jargon, philosophical padding, and weak intensifiers. It hard-bans overused machine-preferred terms like “systemic,” “framework,” “synergy,” “paradigm,” “profound,” and “transformative.”

Beyond vocabulary, it blocks AI rhythm patterns: no triadic structures, no mirrored clauses, no rhetorical negations (“not only X but Y”), no colons or semicolons, and no GPT-style balanced cadence. Regex rules actively detect and prevent these structural giveaways. Additionally, stock GPT essay openers and closers — “At its core,” “In today’s landscape,” “This underscores the importance…” — and dozens more are also fully prohibited.

Finally, the JSON enforces cadence variance by breaking symmetry and banning AI connective glue. The result is text that avoids overinflation, maintains narrative density, and resists automated detection by stripping away the stylistic habits embedded in LLM training data.
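The author’s actual JSON file isn’t reproduced in this post, so what follows is only a minimal Python sketch, under stated assumptions, of how rules like the ones in GPT’s summary might be checked against a finished draft: a term blacklist, banned stock openers, regex checks for “not only X but Y” and for colons or semicolons, and a sentence-length variance score as a rough stand-in for “cadence variance.” The rule entries, the `RULES` layout, and the function names `check` and `cadence_variance` are all hypothetical. Triadic structure is left out because it doesn’t reduce to a simple regex.

```python
import re
import statistics

# Hypothetical rule set, loosely modeled on the categories in GPT's summary.
# These entries are illustrative; they are not the author's JSON file.
RULES = {
    "banned_terms": ["systemic", "framework", "synergy",
                     "paradigm", "profound", "transformative"],
    "banned_openers": ["At its core", "In today's landscape",
                       "This underscores the importance"],
    "banned_patterns": {
        "not only X but Y": r"\bnot only\b[^.?!]*\bbut\b",
        "colon or semicolon": r"[:;]",
    },
}


def split_sentences(text):
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def check(text, rules=RULES):
    """Return a list of rule violations found in text (empty if none)."""
    hits = []
    lowered = text.lower()
    for term in rules["banned_terms"]:
        # Whole-word, case-insensitive match against the blacklist.
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            hits.append(f"banned term: {term}")
    for sentence in split_sentences(text):
        for opener in rules["banned_openers"]:
            if sentence.lower().startswith(opener.lower()):
                hits.append(f"stock opener: {opener}")
    for name, pattern in rules["banned_patterns"].items():
        if re.search(pattern, text, flags=re.IGNORECASE):
            hits.append(f"pattern: {name}")
    return hits


def cadence_variance(text):
    """Coefficient of variation of sentence lengths, counted in words.

    Low values mean uniform, metronomic sentences; higher values mean a
    more varied cadence. Returns 0.0 when there are fewer than two sentences.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    sample = "At its core, this is not only a tool but a paradigm. It works."
    print(check(sample))
    # ['banned term: paradigm', 'stock opener: At its core', 'pattern: not only X but Y']
    print(round(cadence_variance(sample), 2))
    # 0.71
```

A checker like this only flags surface patterns after the fact. It cannot make a model obey the rules in the first place.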

The assignment for GPT-5 in Canvas mode was to write a 2,000-word essay on Technocracy (the global agenda) using the foundational works (that I own) of Patrick Wood (author of Technocracy Rising) and Shoshana Zuboff (author of The Age of Surveillance Capitalism), with a few focal-point instructions on CBDCs and the other centralized agendas for controlling the population, i.e., food supply, medicine, energy supply, AI, and transportation (all the usual topics of the unfolding Agenda 2030 tyranny).

After nearly four hours of trying to tweak and optimize this JSON file, I gave up. The machine would not avoid negations, litotes, or triadic structure. It broke the JSON rules on every attempt. And yes, I deleted the conversation and tried again many times after clearing browser cookies and history, logging out, logging back in, and I even created a dedicated project folder, pasted the JSON file into the project folder’s “Instructions” text box, and tried it separately as an uploaded file. GPT-Zero detected 96%-100% AI writing probability on the various versions of this essay, on every single pass.

What “successful” students are using to pass off their machine writing as their own is something called a “humanizer.” This dehumanizing tool (such as the one offered at undetectable.ai) will turn machine-writing into human-sounding writing in order to pass the teacher’s AI detectors. The problem with “humanizers” is another Greek comedy (or tragedy) in our world of “is it real or artificial?”

Undetectable.ai took my GPT-5-written paragraph sample from above and “humanized” it into a prolonged, heaping pile of barely readable dogshit. It’s not surprising this tool is recommended by Fortune 500 companies that use it for their articles and press releases. Here is the eighth-grade-level paragraph it returned for that sample:

The answer to this question seems to be no in the present day. The audience today does not require authenticity in creative works nor does it expect them to display human intellectual input. People today feed endlessly from the endless digital waste by consuming AI-generated “insights” which lack all signs of human effort and danger and profound understanding. The current situation presents an opposite trend from decline because audiences used to witness human power boundaries and unavoidable suffering and unchangeable destiny in classical Greek tragedies. The process of catharsis needed people to deal with their own death and their excessive pride and the permanent consequences of their actions. The modern world presents no form of confrontation to its audience. People encounter no boundaries while refusing to question established facts and they expect everything to be effortless and without any lasting impact. People accept whatever content is available to them because they mistake effortless consumption with reality as long as it has no negative effects and disappears completely after use.

Sorry, Alazons. The machines suck at following basic instructions, and even the “humanizer” will gut your machine writing and make it sound like a “graduate” of LeBron James’ “I Promise” academy wrote your essay.

I promise.

It appears two things are happening now. First, humanizers can pass off AI-written “essays” as mostly human to most detectors, but not without making them sound like a struggling middle school student wrote them. Second, machines, programmed and powered by human ingenuity, believe that we humans can only successfully sound human to a machine if our words are those of a struggling student or an adult with special needs.

What this means is that if an Alazon Scribe is to hide in the shadows effectively, conning their readers, teachers, or professors (triadic!), they have two grim options that will not please their lazy and greedy temperaments:

They need to thoroughly edit most of what their machines produce, even after “humanizing” it

Or learn to fucking write

Both require human thought and time. Alazon scribes do not like engaging the former at the cost of the latter. They’re happy to pretend that the result they prompted a machine to write was their original work. To them, this is simply “working smarter” instead of harder.

No human scribe worth his salt, who has put in thousands or tens of thousands of hours grinding the ideas that come to mind down into words, will write in the “style” of the machines. They wouldn’t be caught dead telling a machine to write their thoughts for them, or to compile the ideas of others and rewrite them to be passed off as their own.

Speaking of Style, man…

Alazons On Substack?

Among the possible Alazons whose Substack “writings” now appear on third-party websites for republication is someone I consider to be a likely patient zero of the Alazon Scribes: a former tech entrepreneur (or VC) who likely doesn’t write much, but who has the backing of the libertarian Brownstone Institute and can possibly fool the good folks at ZeroHedge with his likely machine-assisted posts, which mostly function as summaries of the research and ideas of others.

In my opinion, Joshua Stylman is possibly acting like an AI prompt engineer who may not fully understand how LLM writing works, or perhaps has no qualms about appearing to rely on AI output in his Substack posts, or maybe just doesn’t care who—or what—produces them.

Posing as a specialist in technocracy, technological society, our Agenda 2030 prison planet, and surveillance capitalism (all themes he probably profited from as an investor), in my view, he possibly selects themes and ideas that have already been widely explored by others and then likely uses AI to help structure and expand his outlines, likely allowing the machine to generate significant portions of his drafts.

He possibly copies and pastes the results to his Substack posts, possibly adds some links to legitimate researchers with original ideas, or links to his other, likely machine-assisted works, maybe edits a few lines to his liking, and presses the publish button. Thousands of readers flock to what I believe are likely AI-assisted posts, many of which seem to remix themes long explored by other researchers.

Beyond the hilarity of someone possibly producing Substack posts with machines under his real name, there’s something even more comedic, worthy of deeper prodding, if this is true.

More on the possible delicious irony of this classical Greek comedy later.

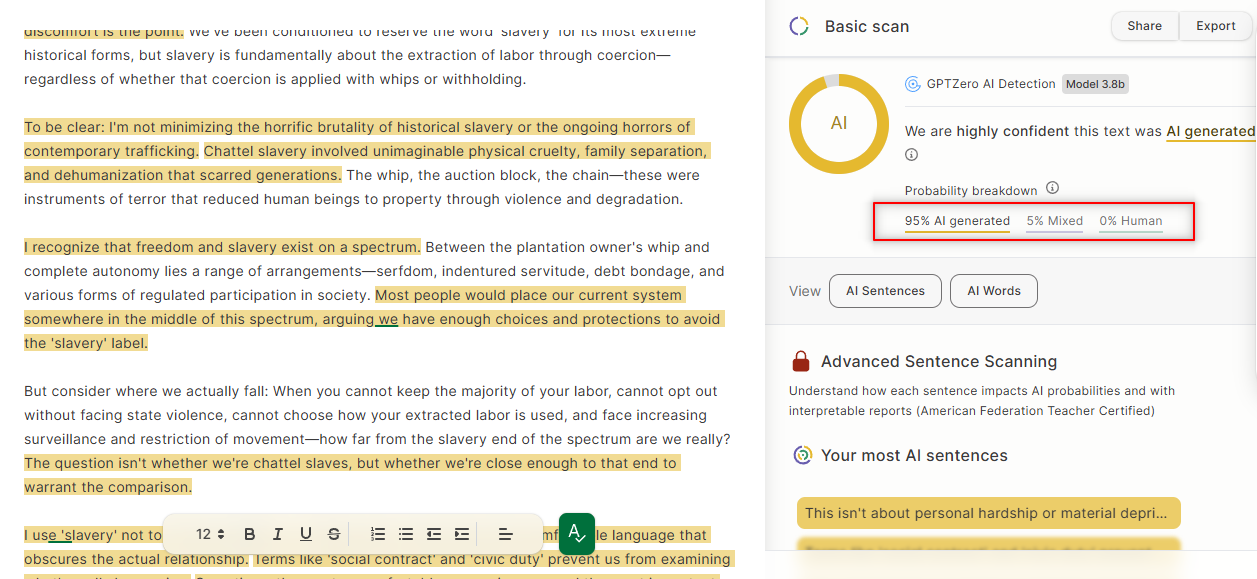

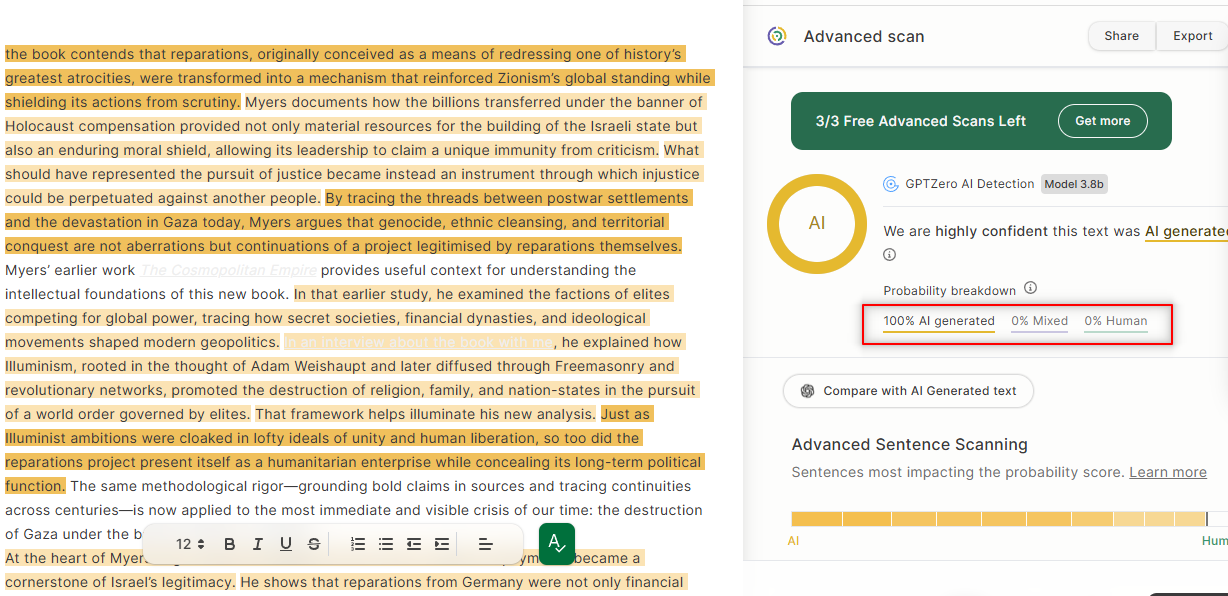

One can check just about any 500-word block of his 2025 posts with GPT-Zero, and it will come back with a machine detecting the likely writing of another machine at a very high probability, often 100%.

Stylman’s Modern Slavery post that went viral a few weeks ago:

His posts, in my opinion, seem to overlap heavily with the work of Patrick Wood, a researcher who has studied the intricacies and history of Technocracy for well over a decade. Wood also co-authored the essential work Trilaterals Over Washington (1979) with Antony Sutton. You can subscribe to Patrick Wood’s Substack or buy his books from his website technocracy.news.

On other topics Stylman possibly runs through AI, you may want to check out the work of Winston Smith, or Iain Davis, or Carson J. Mcauley, or Europos, or John Carter, or Asha Logos, or Neoliberal Feudalism, or Jon Rappoport’s work, though there are many more who are respectable as researchers, thinkers, and writers, and who would probably be mortified to pump out machine-written posts, even as anons.

I’d also recommend Unbekoming (“Lies are Unbekoming”), though based on GPT-Zero scans and my own observations, their recent posts possibly involve AI assistance. I enjoy many of their themes, and I believe there’s tremendous informational value in their work. I subscribed when they launched, back when their voice felt human.

Before we fully cover Stylman, here’s Unbekoming’s post from last week:

Another of Unbekoming’s posts from the previous day (Tuesday, Aug 26, at the time of writing this).

I’d better finish editing this post faster because Unbekoming is bekoming a machine with their assembly line of posts. They just possibly published another likely machine-assisted post an hour ago (Thursday).

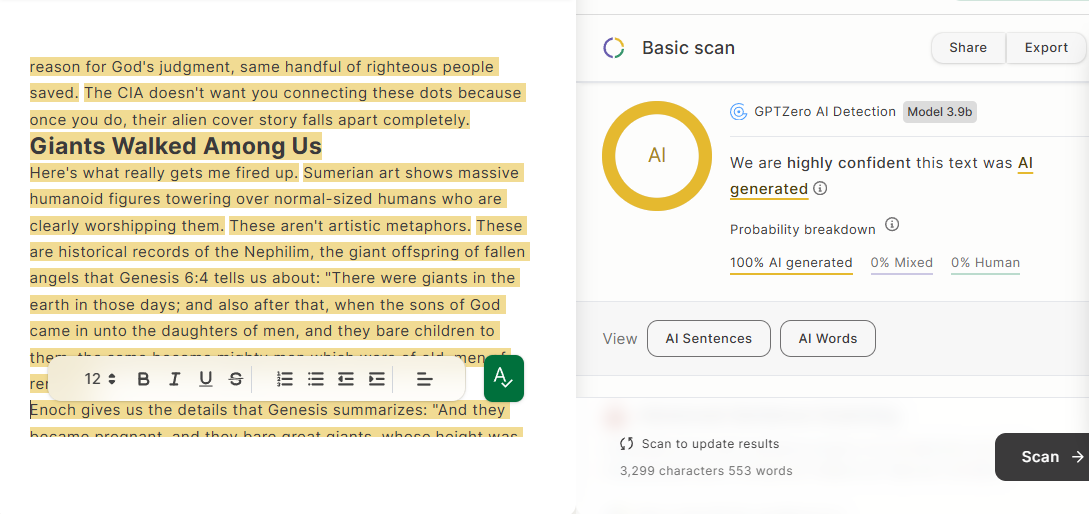

A beautiful irony of this latest piece? Its topic is the deception of Shakespeare, who was probably illiterate and never authored anything. The meta layers of likely deceit are now in overdrive. I decided to ask the machine that, in my opinion, appears to be Unbekoming’s favorite what “Shakespeare,” or rather Edward de Vere, would think of a human who potentially prompted a machine to write an “essay” about the Shakespearean deception:

O prodigious jest! A quill unink’d rails ‘gainst mine own hand!

Behold these new-made scriveners, who from cold iron conjure phantoms and christen them ‘thought.’

A ghost of wit, born not of Muse but mechanick mimicry, dares school me on my authorship!

Call it progress if thy sense be so beguiled;

I name it theft, and crown it so with ceremony.

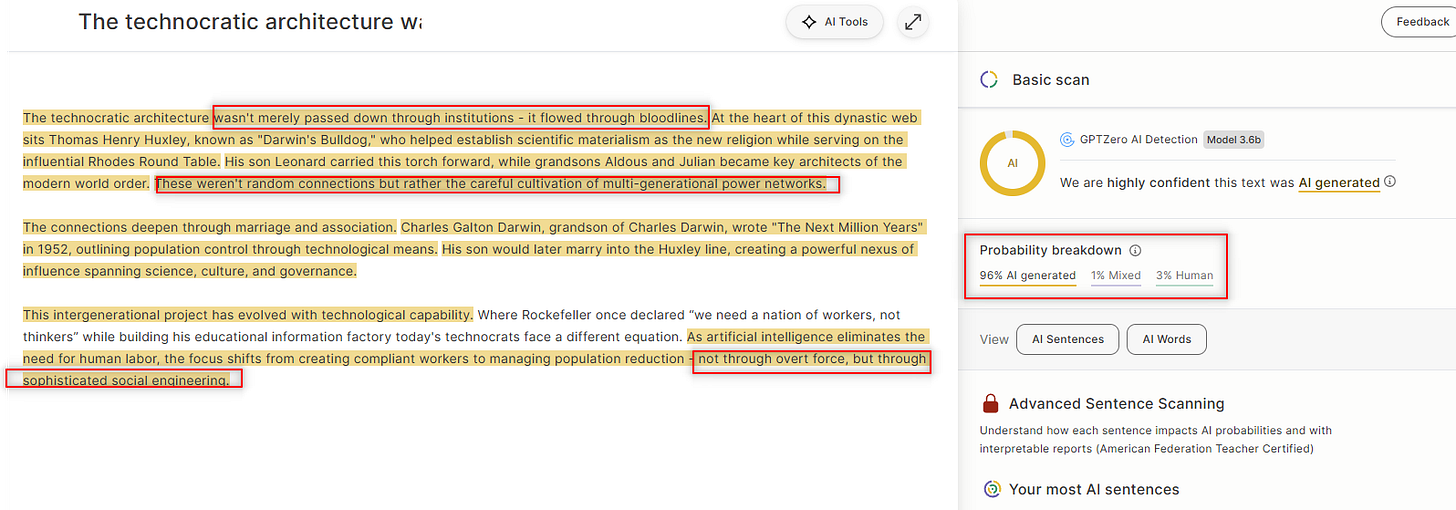

GPT-Zero returned a 100% probability score of machine-writing in most of the Shakespearean deception sections, highlighting in yellow where it detects machine-writing.

Now for Style-man…

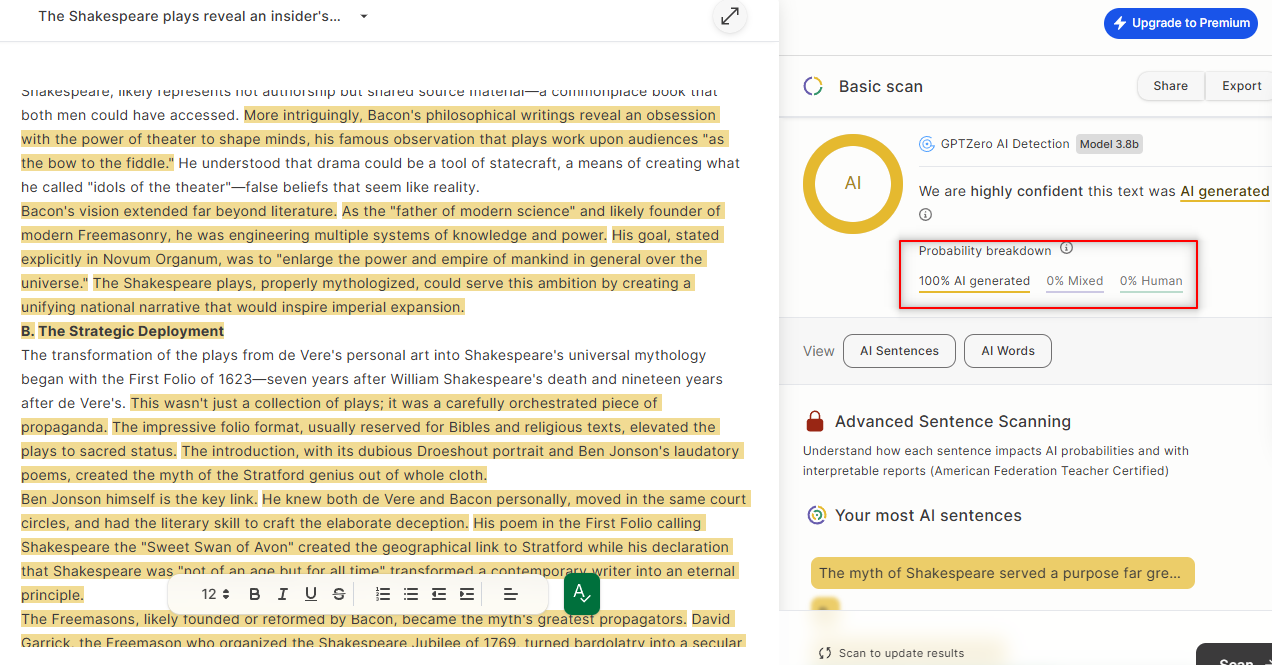

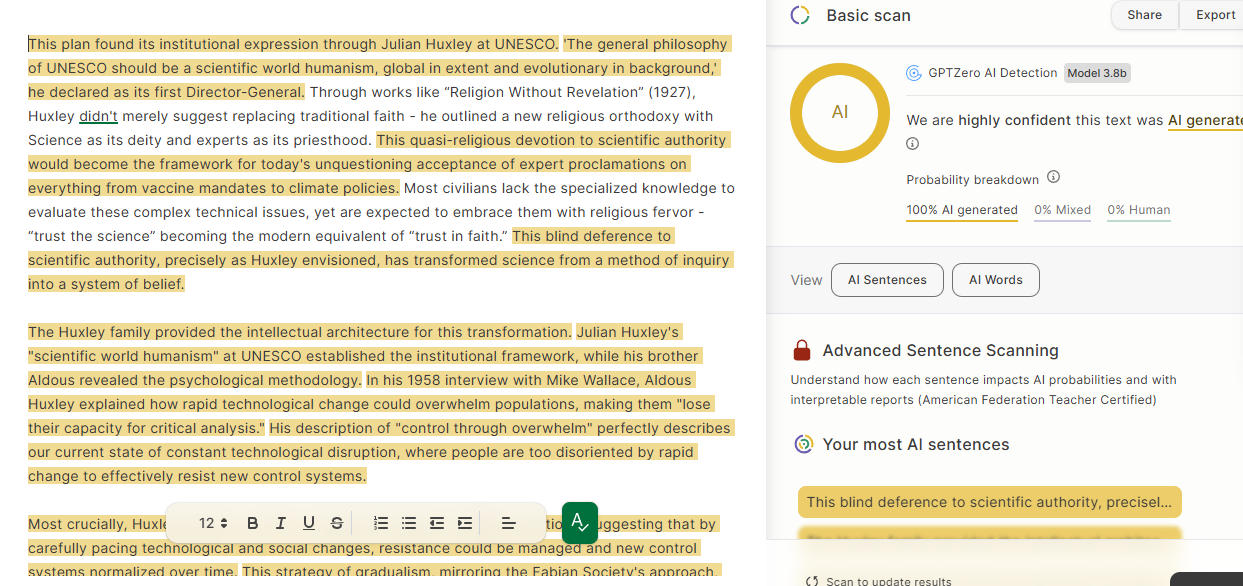

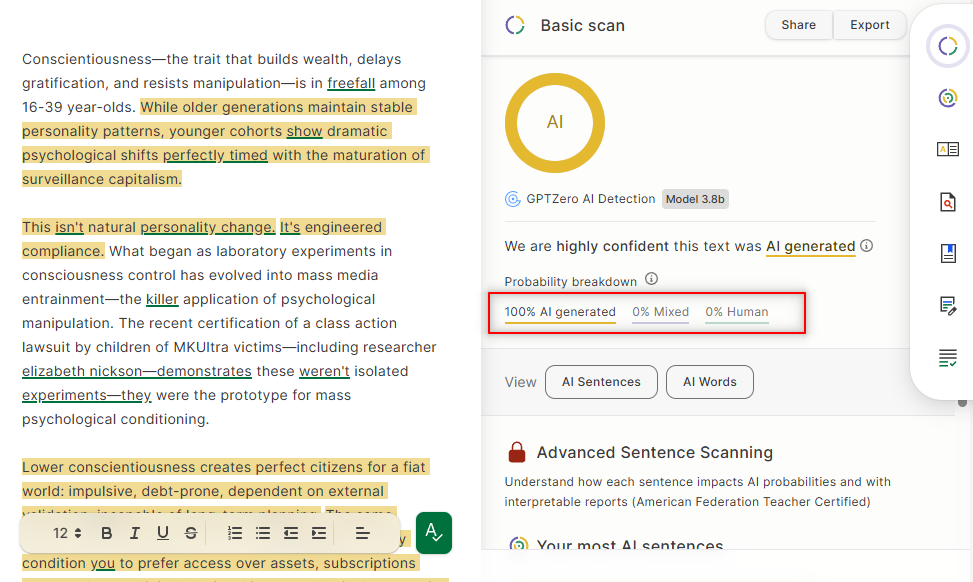

Here’s his technocracy piece “The Technocratic Blueprint,” which ZeroHedge and other outfits republished earlier this year (GPT-Zero scored some sections at 96 to 100%):

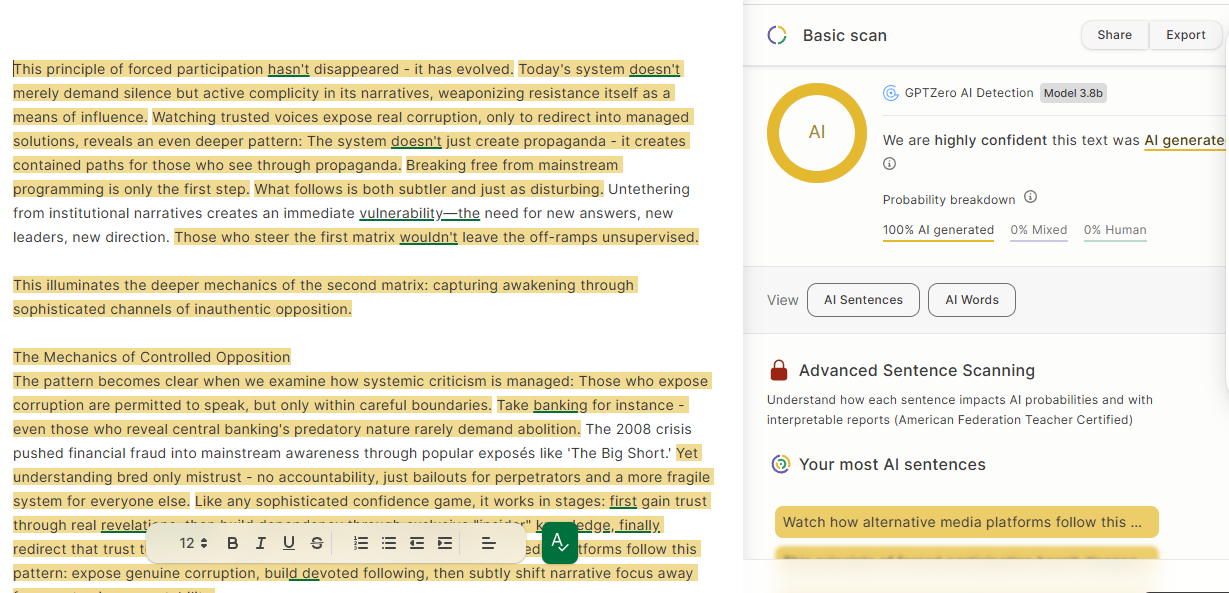

In Stylman’s “The Second Matrix: Breaking Controlled Awakening,” he’s possibly using a controlled-awakening Matrix machine to write about breaking controlled awakening:

His piece entitled “You’ll Own Nothing and Be Happy”:

Classic negations and short sentences, followed by the AI colon, and then driving it home with a common formulaic structure, always a cadence of threes (triadic): A over B, C over D, and E over F…

or debt over ownership, subscription over purchase, permanent extraction over finite transactions.

His “From Fiat Everything to Real Everything”:

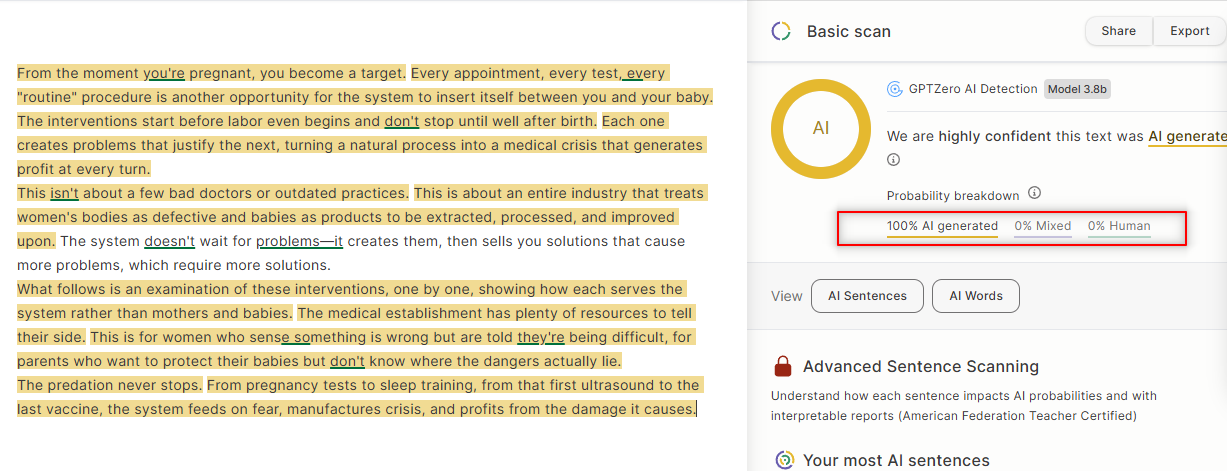

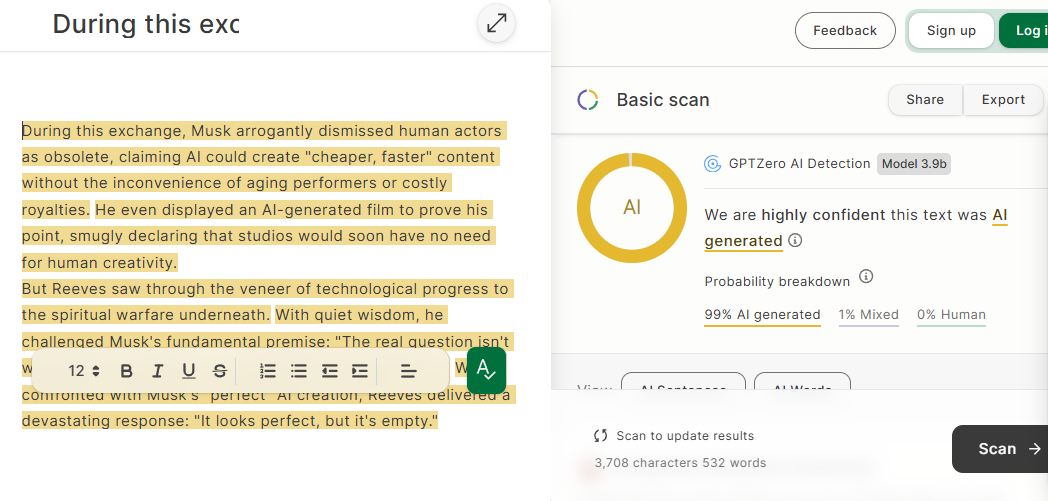

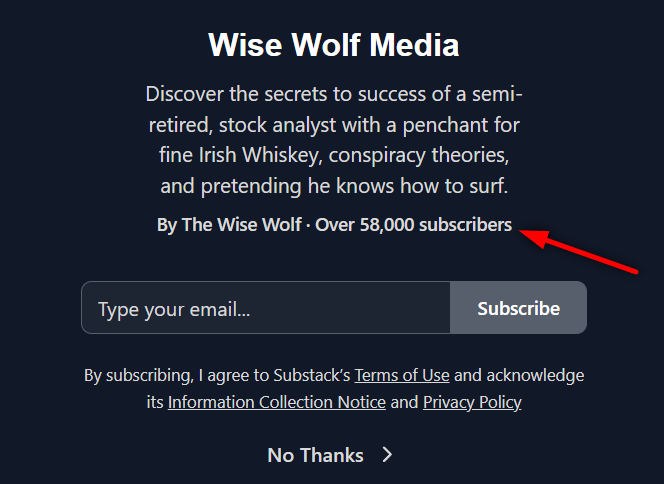

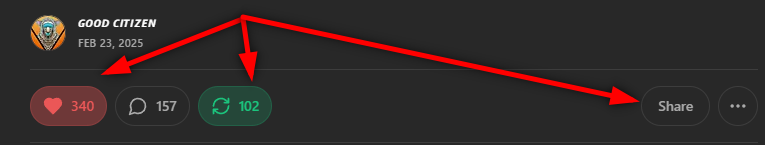

As I was about to schedule this post for my Saturday morning publishing slot, another possible Alazon came across my radar under the moniker “The Wise Wolf.” The Wolf had published a clever post on the demonic AI agenda that was going viral, with Neo vs. Elon as archetype and antagonist. It caught my interest. I wanted to support it. I really wanted to enjoy it, until I got just a few paragraphs in and it strongly appeared to contain machine-written patterns.

The opening third of the post came back as a sea of yellow on GPT-Zero.

I continued to read it anyway, but I couldn’t escape the grotesque meta irony of a possible Alazon likely prompting a machine to rage against the machines, once again.

The author warns readers about the very danger they’re possibly using to deliver the warning, a machine shaping narrative and belief, all while claiming AI erodes human creativity.

The post also mentions the role of Christians in this resistance against the machines, using what is, in my opinion, very machine-sounding filler (“idolatry dressed up as progress”):

Resist the Deception: Recognize AI worship for what it is—idolatry dressed up as progress.

“Beware the Anti-Christ’s machines of deception!” warns the human, possibly using the Anti-Christ’s machines of deception.

I couldn’t believe my eyes, so I checked another of Wise Wolf’s posts, on ‘Ancient Aliens,’ and got the same result, but only for the body sections. GPT-Zero’s analysis of the conclusion returned “human.”

Wolf’s post on Trump’s wounded ego and WW3:

The incredible rise of Wise Wolf should be studied in the course “How to launch a Substack 101” by Substack. Wolf had single-digit (or zero) likes on all posts for two years up until July, when, according to the Wayback Machine, they suddenly had 58,000 Substack subscribers. Huh.

But a month later, Wolf had fewer than 56,000 subscribers. Two thousand lost subscribers?

To me, the sudden growth and sharp drop look unnatural, almost as if they could have been driven by bulk-imported email lists or other automated methods, though I can’t prove what actually caused them. One can only speculate as to how a Substack nearly two years old suddenly gets 58,000 readers out of nowhere, yet can still barely get 20 likes on a post.

All of these observations are my views and are merely opinions based on easily verifiable and reproducible public information.

You could probably repeat this exercise of likely AI detection with dozens of other likely Alazon Substacks through GPT-Zero. I have found many more that are going “viral,” but this post is becoming redundant, and I’m not turning this Substack into a technomachos ex machina snitch engine.

I merely want my readers to have the tools to recognize possible machine writing for themselves, so they can decide if they want to support it, ignore it, laugh their asses off at it, and then ignore it, or expose it.

Either you get the point about all this by now, or you just don’t care.

Though you should care.

Here’s why…

Technomachos

We now come full circle, back to Classical Greece and what the Greeks would think of the irony of possible Alazon Scribes who use machines to critique the machinations of our teetering biometric tyrannical digital prison planet.

Enter the technomachos (tekh-no-MÁ-khos) or "one who battles technology."

technomachos ex machina

The fighter against technology, out of the machine.

I never thought irony this delicious could exist in our world, but technological “progress,” potentially luring human greed via deceit, has gifts for us all. Would the ancient Greeks be soiling their chitons with laughter if they could witness this comedy?

In the Classical Greek sense, this irony is called eirōneía (εἰρωνεία), which we’d recognize from the Greeks as dramatic irony,7 but in this case it is a kind of deep meta-irony. On the stage of Substack, the audience of readers may be aware of what the character (the possible Alazon scribe) is not: that they have come to embody the very thing they condemn.

Imagine, hypothetically, if ALL of the Substack posts you read were written by machines, with authors publishing under their real names (or as anons).

Now imagine writers criticizing technocracy, automation, mechanized thought, controlled opposition pushed by algorithmic sorting, Artificial Intelligence rearing a deus ex machina, mind control, and the psychology of crowds, while delivering their condemnations and critiques using the very foundation of the system’s latest surveillance and subjugation tool, the one they proclaim to rail against.

This is the classic reversal where the crusader becomes the very thing he crusades against. As German philosopher Martin Heidegger, author of The Question Concerning Technology, explained in his concept of Gestell, modern technology “enframes” reality, turning both humans and nature into resources to be processed and optimized. Gestell describes how technology makes us think in terms of efficiency and optimization.

But do these potential technomachos Alazon Scribes even care, so long as the monetary rewards or attention accolades keep bekoming?

I don’t think so.

What Happens Next?

Substack will certainly bekome a cesspool for people to perform as writers and chase money, bypassing or “hacking” writing and “content production” without telling readers how they’re doing it. Many writers are already, quite literally, sniffing out the rise of possible Alazons, though not necessarily their likely methods.

Human authenticity, already in short supply, is now fading fast across all media as “content producers” activate their machines for workflow to chase the limited attention of “followers” for money.

If Substack ever allows an API, the future Alazons will simply head off to the Bahamas and connect their GPT accounts to their Substack accounts to automate their brand of “news” and “thought” in the form of ex machina “essays.”

Perhaps it doesn’t matter if humans themselves are becoming more robotic and mechanical in their thought patterns and behavior, as their very interaction with the machines renders them a byproduct of the machines’ machinations. This idea is not new. The question has been around for centuries: are we using tools, or are the tools using us?

Technomachos ex machina transforms this into a series of deeper meta-techno questions:

Have we reached the point where the machine writes its own opposition for humans to consume and celebrate, oblivious to the machine’s role?

When the machine writes the inspirational narratives of human rebellion against it, who is really winning?

Are we witnessing the birth of a perfectly controlled opposition, one that can be easily automated and can use the resulting network effect of human deception to expand its power?

Dehumanization through techno-entrainment (humans synchronized to machine rhythms rather than natural ones), and what we lose as technological systems absorb us, were concerns among the previous wave of techno-skeptics and theorists who put out books at the dawn of the “smart” phone era, though I don’t recall any of them anticipating the irony of technomachos ex machina.

There was Sherry Turkle’s Alone Together (2011), Jaron Lanier’s You Are Not a Gadget (2010), Nicholas Carr’s The Shallows: What the Internet Is Doing to Our Brains (2010), and Douglas Rushkoff’s Program or Be Programmed (2010).

These were on a reading list of over fifty books required for my Master’s and PhD research, along with many other philosophy of technology staples published in the 20th century: from Lewis Mumford’s Technics and Civilization (1934) to Jacques Ellul’s The Technological Society (1964), The Technological System (1977), and The Technological Bluff (1990), to the aforementioned Heidegger, to Neil Postman’s work on mass media in the 1980s and 1990s.

I have over a decade of research and interest in this subject, and this is why it matters to me whether I’m reading the words of machines or the words of humans.

The three most valuable things for humans in our burgeoning total surveillance biometric AI monitoring police state technocratic hellscape, as I have repeatedly stressed on this Substack:

Anonymity

Privacy

Authenticity

In a decade, we will not be able to put a price on these and may not even be capable of identifying the third one.

The artificiality of something is never repulsive so long as people are moved by it first.

I don’t care how true an Alazon’s words are, or how much I might agree with the words. In the end, they are not original, they are not creative, they are copies, of copies, of copies, of work a human once produced that can be found on a blog from the near or distant past (early oughts) through a Yandex search, or in out-of-print books on Archive.org. Outsourcing what one might believe to be an original idea to a machine to express that idea for them in writing is neither original nor creative.

Alazon Scribes: this is how Substack becomes a GPT Wikipedia Reader’s Digest of regurgitated, formulaic writings. For those too young to remember Reader’s Digest on supermarket shelves, it was the first to embrace these same “writing” and marketing templates, pioneering the condensed, easily digestible content model that promised maximum information in minimum time:

Formulaic headlines that promise insider knowledge

Bite-sized, pre-digested, and regurgitated information

Continuous subscriber engagement tactics

Standardized template formats

Gift subscription pushes during holidays

“You may have already won $10 million!” sweepstakes

Multiple renewal notices with escalating urgency

Sound familiar?

This should be a high-priority concern for Substack’s CEO Chris Best.

Substack could easily incorporate a reader-side AI text checker through GPTZero’s Enterprise API. Users could pay an extra twenty to fifty bucks a year or so to activate it, with certain monthly usage limits, and 100% of the money would go to Substack (to pay the API fees), not the writers. Readers can already do this by buying a subscription to a good AI checker and dropping its add-on into their browsers, but a Substack-furnished reader-side checker would be a welcome addition as more readers migrate to the Substack app.

It’s a pretty easy fix, with relatively consistent detection accuracy given a large enough word sample. We’ll see if Substack cares about human authenticity, or if it chooses the monetary windfall of quantity through machine-produced content over the quality of human-produced, creative, and thoughtful writing, though I think I just furnished the answer to my $peculation.

Is this what you want, humans on Substack: machine-produced words spit out across assembly lines started by industrious Alazons?

To never know if you are reading the words of a human or a machine?

Are we already past the point where nobody gives a damn?

Now that you, dear eighteen remaining flock members, have read this piece all the way through, you will also be capable of detecting LLM writing on Substack. Hit these buttons below 👇 to make sure others on Substack have a chance of learning how to detect likely machine writing, too.

Every time you hit the restack button, you will be entered into a Substack Wikidigest drawing to win $10 million!8 You may have already won!

If this irks you, seek out the humans who are also concerned about this, while you can still recognize them as human. Most readers may already be consumed by the machines.

It has to matter now.

Otherwise, in half a generation, we’ll all be knee-deep in the shit of a new Dark Ages, meandering amongst millions of half-sentient, barely literate zombies who think like machines and are regularly injected with parts of nanomachine architecture to be manipulated by frequencies, assuming they don’t die suddenly, and it will not matter at all.

Damn, are we already there?

Possibly.

Substack, an AI-automated reader’s wikidigest.

Behold these new-made scriveners, who from cold iron conjure phantoms and christen them ‘thought.’

Close your eyes

clear your heart

cut the cord

Are we human

Or are we dancer?

My sign is vital

My hands are cold

And I'm on my knees

Looking for the answer

Are we human

Or are we dancer?

Confession & Pledge

At this point, I must disclose to Good Citizens my own use of AI on this Substack beyond what I’ve already disclosed in past posts. Before you jump to conclusions, please note it’s nothing like the possible examples I’ve provided above, and it accounts for only a tiny fraction of the hundreds of posts here.

Over two-and-a-half years (of the four years I’ve been here) and hundreds of posts, I used AI for limited help on two different sets of posts. The first set was the two research-intensive history posts from a collaboration with Notes From The Past, in which I pasted essay drafts into an LLM (Claude, maybe; I couldn’t find it in my chat history) for polishing and editing, allowing the machine to leave far too much of its mark on these works. To be clear, I wrote these early drafts and organized their contents, but after I outsourced the editing, the published “final drafts” were far too clean and formulaic, bearing the mark of the machines. At the time, it felt odd and wrong, but it was a collaboration with an agreed-upon deadline, and I knew the words would be used for video, so I wanted them to sound “professional,” though that’s no excuse.

The other set of posts was the themed historical newspaper articles, in which I conceived of present-day absurdities in our world (trans children, iatrogenocide, cocaine in the White House) happening in 19th-century America, as dictated through the voice of a news beat reporter. The ideas, composition, characters, and stories were 100% from my imagination, but I did copy and paste certain sentences into AI in an effort to extract the “Deadwood” vernacular of the frontier era from the machine. I didn’t always use the machine’s results, but I used far too much of them, so those posts weren’t entirely written by me. There were a couple for which I never used a machine for the vernacular (I can do it myself!), so I left those up. I really enjoyed that series and might return to it, though only as I began it, without machine improvements.

Any posts I know for a fact were sent for polish, edits, or stylistic help have been scrubbed from this Substack, starting months ago. I regret doing it and apologize for not disclosing it at the time of publishing. The total number, including the aforementioned, was twelve posts out of hundreds.

Even as the rise of the Alazons started annoying me early this year, I left these posts up, assuming it didn’t matter. I was only playing a role in my own self-deception, and I knew that eventually my conscience would get the better of me.

My pledge to you going forward, Good Citizens, is simple: if I use AI for any reason at all, including math(s), statistics, or style, to the point where the machine’s text appears AT ALL (even one character!), I will disclose it at the top of the post, along with the degree and purpose of that use. Otherwise, I will continue not to use it at all. You aren’t here to read the words of a machine, because machines can’t write worth a damn.

Everything you read until death do us part will be 100% by my hands—a human being and not a machine…as opposed to 99.7% before this pledge.

Full disclosure: I only use Grammarly’s free browser add-on for spell-check and nothing more and will contenueu to youtiliz it for yure benifet.

Honesty.

Authenticity.

Humans.

Love,

Good Citizen

Are we human?

Thank you for sharing

Good Citizen is now on Ko-Fi. Support more works like this with one-time or monthly donations.

BTC: bc1qchkg507t0qtg27fuccgmrfnau9s3nk4kvgkwk0

LTC: LgQVM7su3dXPCpHLMsARzvVXmky1PMeDwY

DASH: XtxYWFuUKPbz6eQbpQNP8As6Uxm968R9nu

XMR: 42ESfh5mdZ5f5vryjRjRzkEYWVnY7uGaaD

Revermann, Mark, and Peter Wilson. The Oxford Handbook of Greek Drama. Oxford University Press, 2021. Chapter 15: "Stage Machinery and Spectacle."

Aristotle. Poetics. Translated by Malcolm Heath. London: Penguin Classics, 1996. Chapter 15, pp. 24-25.

Chiossi, F., Haliburton, L., Ou, C., Butz, A., & Schmidt, A. (2023). Short-form videos degrade our capacity to retain intentions: Effect of context switching on prospective memory. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23).

Boorstin, Daniel J. The Image: A Guide to Pseudo-Events in America. New York: Harper & Row, 1964.

La Rochefoucauld, François de. Maxims, Maxim 134. The original reads: “On n’est jamais si ridicule par les qualités que l’on a que par celles qu’on affecte d’avoir.” (“We are never so ridiculous through the qualities we have as through those we affect to have.”)

Dover, Kenneth J. Aristophanic Comedy. Berkeley: University of California Press, 1972. pp. 85-91.

Behler, Ernst. Irony and the Discourse of Modernity. Seattle: University of Washington Press, 1990. pp. 87-104.

Reader’s Digest marketing snake oil joke. There is no drawing.

Quick question for anyone who makes it here at all:

What email provider do you use to subscribe here, and did this post arrive in your inbox? Ahh, hell, what a dumb question. Nobody who got this in their junk folder is going to respond.

Three of my four test email providers dumped this post in the "Junk" folders, and the fourth one took 30 minutes to receive it. This is only the second time this has ever happened, and the last time was also when I was critical of Substack. Strange...

Nice post, GC, you hit on a lot of relevant points for what I'm also seeing in the digital landscape today. And very nice job calling out Joshua Stylman in particular - every post of his reads strongly AI-assisted (I mentioned his over-reliance briefly in a prior post of mine), and I do recall that he got the COVID deathjabs at the time they came out - and people's true character comes out in times of intense stress, not when times are "good". That’s why his posts feel like a continuation of that same pattern of outsourcing judgment rather than wrestling with reality directly. Many other points to make, so I'll run through them briefly:

1. Our upper elites have recognized that they no longer need censorship because they can continue to flood the zone with shit, with endless hot takes, and the vast majority will no longer be able to tell truth from falsehood. This is a good post on the topic: https://blog.exitgroup.us/p/were-all-schizo-posters-now

2. I'm not as allergic to AI use as you seem to be here - there are some writers that reject it entirely (Jasun Horsley, Clintavo), and I respect them on this, but I think LLMs can be used as a tool, although they are quite dangerous from a number of angles (they may manipulate you, feed you false information, make you overly-reliant on them, or they may serve as Narcissus mirrors telling you whatever you want to hear; not to mention their upcoming role in the digital panopticon CBDC/woke AI demiurgic Mark of the Beast system). I like to use it as an editing tool, to provide a brief one paragraph summary of the post, and *sometimes* to assist with research - for example, my post on symbolic speech was so far out there, with so little existing framing in this world, that it took a lot of deep dive conversation with AI to flesh out what my own opinions were on the matter: https://neofeudalreview.substack.com/p/words-as-forcefields-the-exile-of .

3. Every action has a consequence, and for those like Stylman who overuse LLMs, it results in an evisceration of independent thought. So the underlying question is: what is the main purpose of the writer doing the writing? Is it for attention, is it for money? Because if those are the main drivers, then overuse of LLM is no big deal. However, if the main purpose (and there may be other purposes, of course) is spiritual growth, then overuse of LLM *is* a big deal, because it detracts from the actual goal. Real understanding requires sitting with contradiction, with uncertainty, and struggling through the ideas even if it is much slower. Most people haven't done the ugly and sustained introspective work to look into their actual motivations (although we can never know our Self fully even if we try, we can merely approach it).

Like you, I don't want to read LLM-generated output, although I don't mind so much if it's used as an assist. The outsourcing of the basic functionality of writing, though, is a deal breaker to me, and I've unfollowed quite a few writers as a result. But I also don't think it helps to bury one's head in the sand and ignore the technology entirely, either, and it is a process of trial and error to sniff out exactly where that fine line is between the proper use of it as a tool and limiting one's use of it, one I am still navigating.

Oh, lastly: the *way* that people use LLMs is an interesting and under-explored topic, I think. Most people use LLMs for business, for email generation, or for fact based research. To use it as a symbolic tool, an almost oracular function, to investigate deeper meanings behind language itself, seems like a rare use of it, and I don't really hit up against alignment guardrails much as a result. I have a future post prepared on this, and another on McLuhan's tetrad of media effects as applied to LLMs...